One of my long-standing hobbies is home automation. Over the years, I’ve enjoyed connecting various devices throughout our house using the Home Assistant platform. This powerful open-source system lets me integrate technologies from different manufacturers without getting locked into proprietary ecosystems or relying on cloud services for basic functionality.

Recently, I’ve been curious about how recent advances in generative AI might enhance my home automation setup. After reading several interesting posts about a new Home Assistant integration called LLM Vision, I decided to give it a try. This integration lets you send snapshots or video clips from security cameras to large language models for analysis – a perfect opportunity to learn about AI vision while potentially adding a useful security feature to my home.

Getting Started: Easy Setup

Installing LLM Vision was straightforward – as simple as adding any other custom integration through HACS (Home Assistant Community Store).

LLM Vision supports several model providers, including OpenAI, Anthropic, Groq, and Google Gemini. I opted for Google’s Gemini models for a practical reason: Google offers a free tier for limited usage, and I didn’t see the need to pay for model time on what was essentially an experimental project. Creating a Google AI Studio account and generating an API key took just a few minutes.

Building the Automation: A Four-Step Process

The automation was relatively simple, but involved several steps:

The Trigger: I used motion detection from my UniFi cameras, with a condition so the automation only runs when nobody’s home. This prevents a flood of notifications about my own movements around the house. I also considered adding a timeout to avoid repeated alerts when there’s continuous activity in view, like neighborhood kids playing in the yard.
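The timeout mentioned above isn’t part of the configuration that follows. One common way to get that behavior in Home Assistant is to run the automation in single mode and end the action list with a delay: while the delay is running, the automation counts as still in progress, so new motion triggers are dropped. The sketch below is an untested starting point. The five-minute cooldown is an arbitrary assumption, and instead of checking a single person entity it uses zone.home, whose state is the number of person entities currently in the home zone.

```yaml
# Hypothetical cooldown sketch, not the configuration used in this post
mode: single          # a new trigger is ignored while a run is still in progress
max_exceeded: silent  # suppress the warning logged for ignored triggers
triggers:
  - type: motion
    device_id: 9ed9b74fa9485dbc3895d1b264e96f04
    entity_id: e079587d1a1f794825f8581e04d09762
    domain: binary_sensor
    trigger: device
conditions:
  # zone.home's state is the number of person entities at home; below 1 means nobody
  - condition: numeric_state
    entity_id: zone.home
    below: 1
actions:
  # ...snapshot, analysis, and notification steps go here...
  - delay:
      minutes: 5  # assumed cooldown length; the run stays active until it ends
```

Because the delay keeps the run active, any motion during those five minutes is silently skipped rather than queued.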

```yaml
triggers:
  - type: motion
    device_id: 9ed9b74fa9485dbc3895d1b264e96f04
    entity_id: e079587d1a1f794825f8581e04d09762
    domain: binary_sensor
    trigger: device
conditions:
  - condition: state
    entity_id: person.will
    state: not_home
```

The Snapshot: The system captures an image from the video feed – not for AI analysis, but to include in notifications so I can quickly see what triggered the alert without opening the full video stream.

```yaml
actions:
  - action: camera.snapshot
    metadata: {}
    data:
      filename: /config/www/snapshots/front-door-camera-snapshot.jpg
    target:
      entity_id: camera.front_door_doorbell_camera_high
```

The Analysis: Here’s where it gets interesting. I configured the LLM Vision “Stream Analyzer” service to use Gemini 1.5 Pro and spent some time fine-tuning the prompt. Since this was meant to be both useful and somewhat entertaining, I landed on:

“Describe what you see in one sentence. If you see a person, describe what they look like. If you see an animal, describe what it looks like. Be silly and playful with the descriptions. Ignore the dark object obscuring the view from the right.”

That last instruction came after I noticed the AI kept mentioning a “large dark object” in every description – which turned out to be my storm door handle visible in the frame. The solution? Simply ask the AI to ignore it.

I set the Temperature parameter to 0.55 (on a scale of 0–1), which struck a good balance between accuracy and creative description. The LLM’s text summary is saved in the variable front_door_response so it can be used later in the notification.

```yaml
  - action: llmvision.stream_analyzer
    metadata: {}
    data:
      remember: false
      duration: 5
      max_frames: 3
      include_filename: true
      target_width: 1280
      detail: low
      max_tokens: 75
      expose_images: false
      provider: [hidden]  # value redacted in the original post
      image_entity:
        - camera.front_door_doorbell_camera_medium
      message: >-
        Describe what you see in one sentence. If you see a person, describe
        what they look like. If you see an animal, describe what it looks like.
        Be silly and playful with the descriptions. Ignore the dark object
        obscuring the view from the right.
      model: gemini-1.5-pro
      temperature: 0.55
    response_variable: front_door_response
```

The Notification: Finally, I set up notifications to be sent to all our devices, including the snapshot and a direct link to the live camera feed. One tap takes you straight to a full-screen view of what’s happening – a small but important usability feature. Note that the response_variable from the LLM Vision Stream Analyzer step is what pulls the LLM text into the notification message.

- action: notify.notify_all_devices

metadata: {}

data:

title: Motion in front of the house

message: "{{ front_door_response.response_text }}"

data:

image: /local/snapshots/front-door-camera-snapshot.jpg

actions:

- action: URI

title: Open Camera

icon: sfsymbols:video.circle.fill

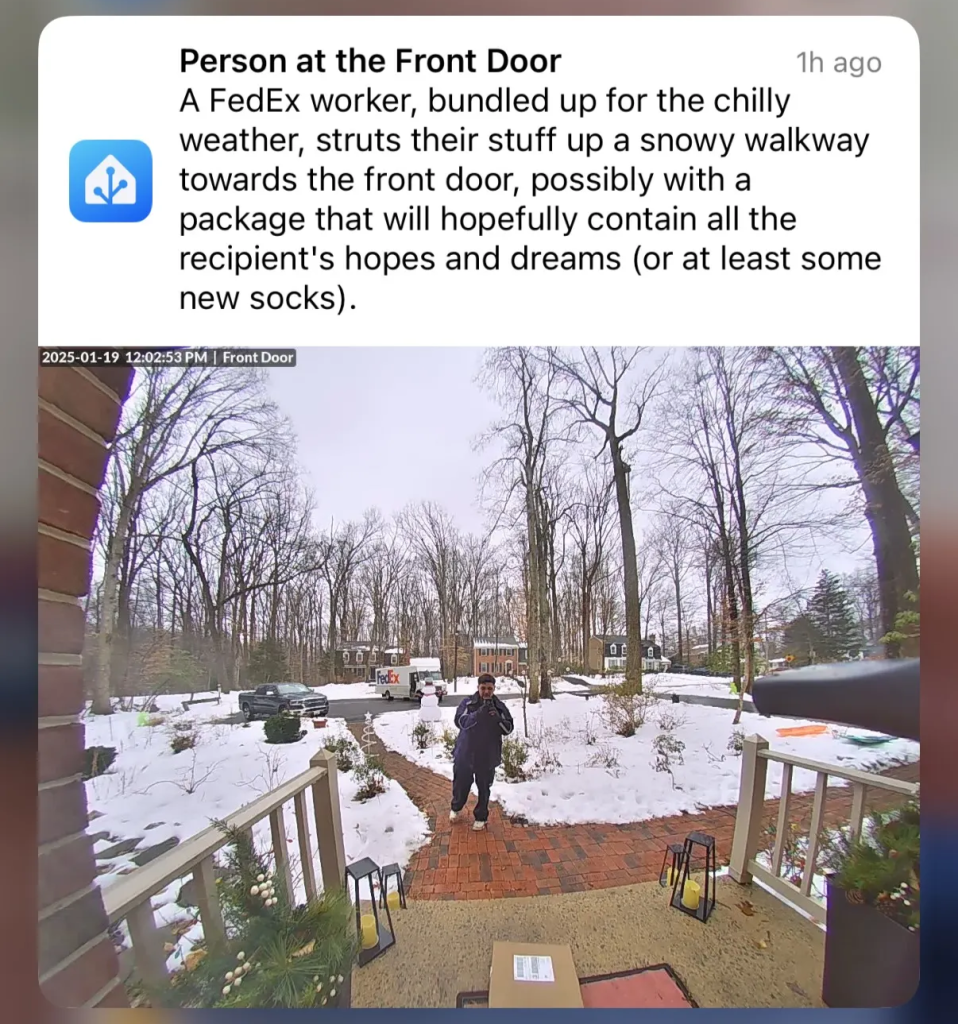

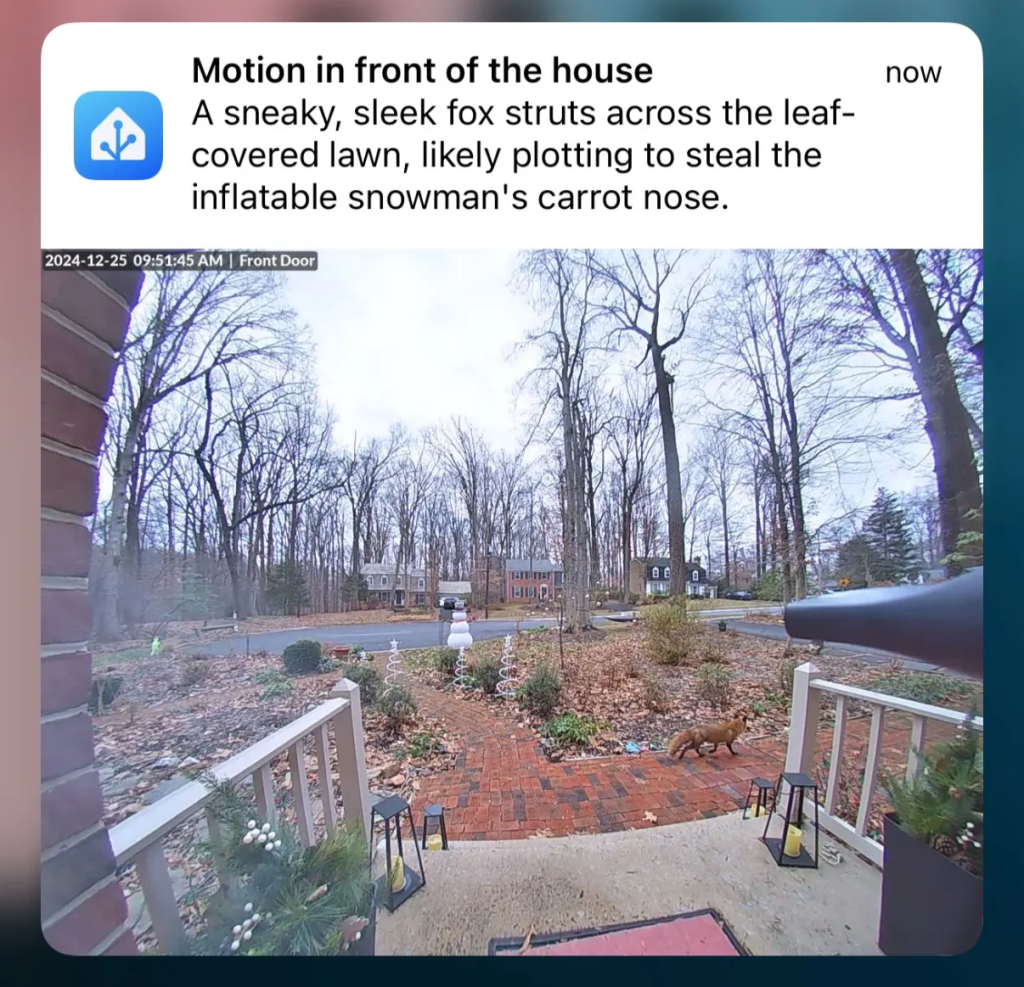

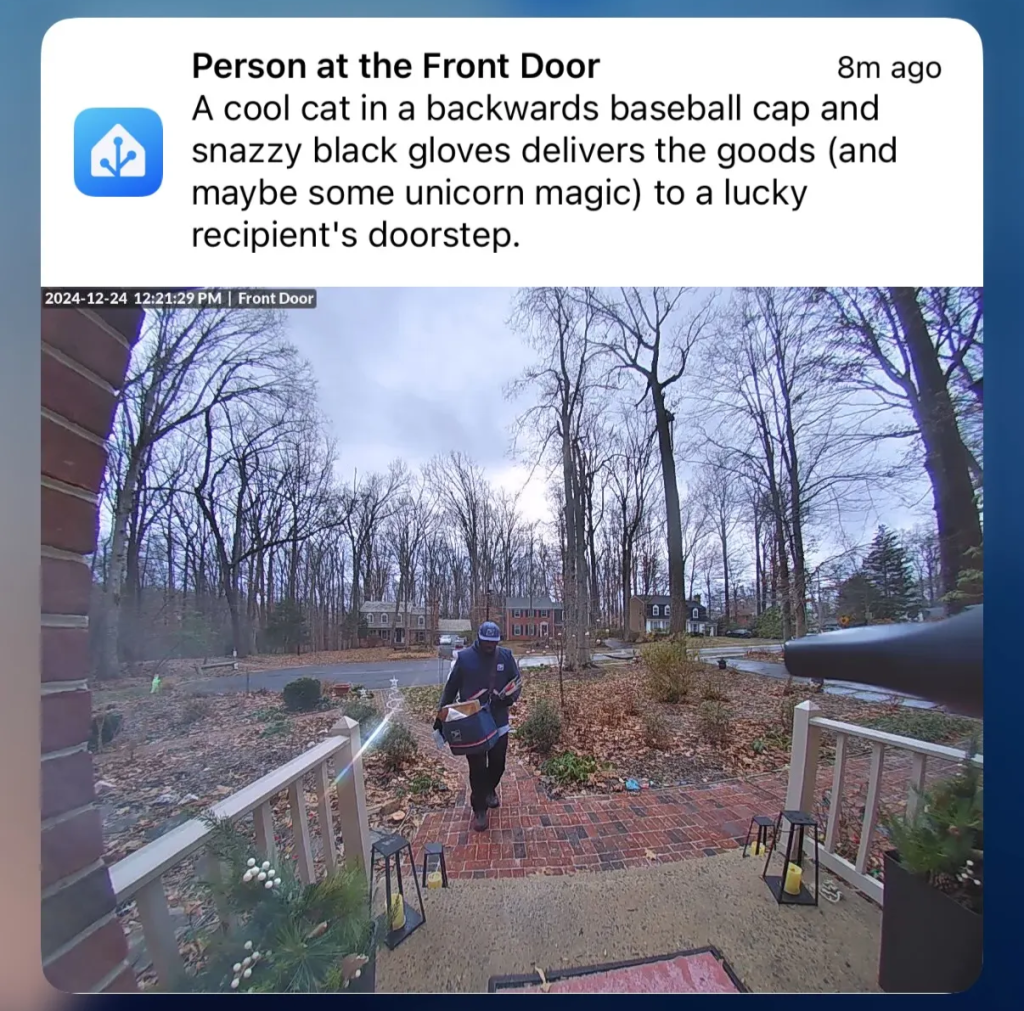

uri: /lovelace/front-door-cameraThe Results: Practical with a Touch of Personality

While I’m not sure this is my most essential home automation project, it’s certainly one of the more interesting ones. There’s genuine utility in receiving a descriptive text about camera activity without having to open the video feed to see what’s happening.

With a more straightforward prompt, this setup could provide efficient situational awareness for home security. Instead of generic “Motion detected” alerts, you could receive specific information like “Delivery person with package at front door” or “Stray cat wandering through the garden.”
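For that more straightforward variant, the analysis step would mainly need a different prompt. The fragment below is a sketch, not a tested configuration: the prompt wording and the lower temperature of 0.2 are illustrative assumptions, and every key not shown (provider, image_entity, duration, max_frames, and so on) would stay as in the configuration above. A lower temperature should make the wording less varied from one alert to the next, which suits a notification you want to scan at a glance.

```yaml
  # Sketch of a more factual analysis step (prompt and temperature are assumptions)
  - action: llmvision.stream_analyzer
    metadata: {}
    data:
      # provider, image_entity, duration, max_frames, etc. unchanged from the playful version
      message: >-
        In one short, factual sentence, say what caused the motion at the
        front door, such as a delivery person with a package, a visitor, or
        an animal. Ignore the dark object obscuring the view from the right.
      model: gemini-1.5-pro
      temperature: 0.2  # lower temperature for less varied wording
    response_variable: front_door_response
```

Since the response variable keeps the same name, the notification step would not need to change.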

For my implementation, I’ve kept the slightly more playful approach, which provides both security monitoring and the occasional smile when reviewing the AI’s descriptions. Some of the messages we’ve received have been surprisingly entertaining – the AI has a knack for coming up with creative ways to describe ordinary events. What might have been a boring “cat detected” alert becomes “A curious feline investigator is conducting a thorough inspection of your garden shrubs.”

This project demonstrates how new AI capabilities can be integrated into existing home automation systems to create features that are both practical and engaging. While not revolutionary, it’s a tangible glimpse into how our smart homes might interact with us more naturally in the future – combining utility with just enough personality to make technology feel a bit more human.

The screenshots above show a handful of the notifications we’ve received.

Have you incorporated AI into your home automation setup? I’d be interested to hear what applications you’ve found most valuable – or most entertaining – in the comments below.
